Editor’s Note: Since the launch of QHub in 2020, we have evolved it into a community-led open source project called Nebari. See our blog post on Nebari and visit nebari.dev for more information.

Today, we are announcing the release of QHub, a new open source project from Quansight that enables teams to build and maintain a cost-effective and scalable compute/data science platform in the cloud or on-premises. QHub can be deployed with minimal in-house DevOps experience.

See our video demonstration.

Deploying and maintaining a scalable computational platform in the cloud is difficult. There is a critical need in organizations for a shared compute platform that is flexible, accessible, and scalable. JupyterHub is an excellent platform for shared computational environments, and Dask enables researchers to scale computations beyond the limits of their local machines. However, deploying and maintaining a scalable cluster for teams with Dask on JupyterHub is a difficult task. QHub is designed to solve this problem without charging a large premium over infrastructure costs, as many commercial platform vendors do, and without requiring the heavy DevOps expertise that a roll-your-own solution typically demands.

QHub provides the following:

Each of these features is discussed in the sections below.

QHub also integrates many common and useful JupyterLab extensions. QHub Cloud currently works with AWS, GCP, and Digital Ocean (Azure coming soon). The cloud installation is based on Kubernetes but is designed in a way that no knowledge of Kubernetes is required. QHub On-Prem is based on OpenHPC and will be covered in a future post. The rest of this post will cover the cloud-deployed version of QHub.

QHub does this by storing the entire configuration of the deployment in a single version-controlled YAML file and then using an infrastructure-as-code approach to deploy and redeploy the platform seamlessly. This means that any time a change is made to the configuration file, the platform is redeployed. The state of the deployed platform is self-documenting and is always reflected in this version-controlled repository.
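The redeploy-on-change idea can be illustrated with a small sketch. This is not QHub's implementation: the function names and the configuration keys below are hypothetical, and the sketch only shows one way a change to a version-controlled configuration file might be detected by comparing fingerprints.

```python
import hashlib
from typing import Optional


def config_fingerprint(config_text: str) -> str:
    """Return a SHA-256 fingerprint of the configuration text."""
    # Strip trailing whitespace and normalize line endings so that cosmetic
    # differences between checkouts do not look like configuration changes.
    normalized = "\n".join(line.rstrip() for line in config_text.splitlines())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def needs_redeploy(deployed_fingerprint: Optional[str], config_text: str) -> bool:
    """True when the config differs from the last deployed one, or nothing is deployed yet."""
    return deployed_fingerprint != config_fingerprint(config_text)


# Hypothetical configuration snippets; the keys are illustrative, not QHub's schema.
old_config = "project_name: demo\nprovider: gcp\n"
new_config = "project_name: demo\nprovider: aws\n"

deployed = config_fingerprint(old_config)
print(needs_redeploy(deployed, old_config))  # False
print(needs_redeploy(deployed, new_config))  # True
```

In a real workflow the comparison would more likely be driven by commits to the repository than by a stored hash; the sketch only makes the "configuration change triggers redeploy" relationship concrete.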

Overview of the infrastructure-as-code workflow

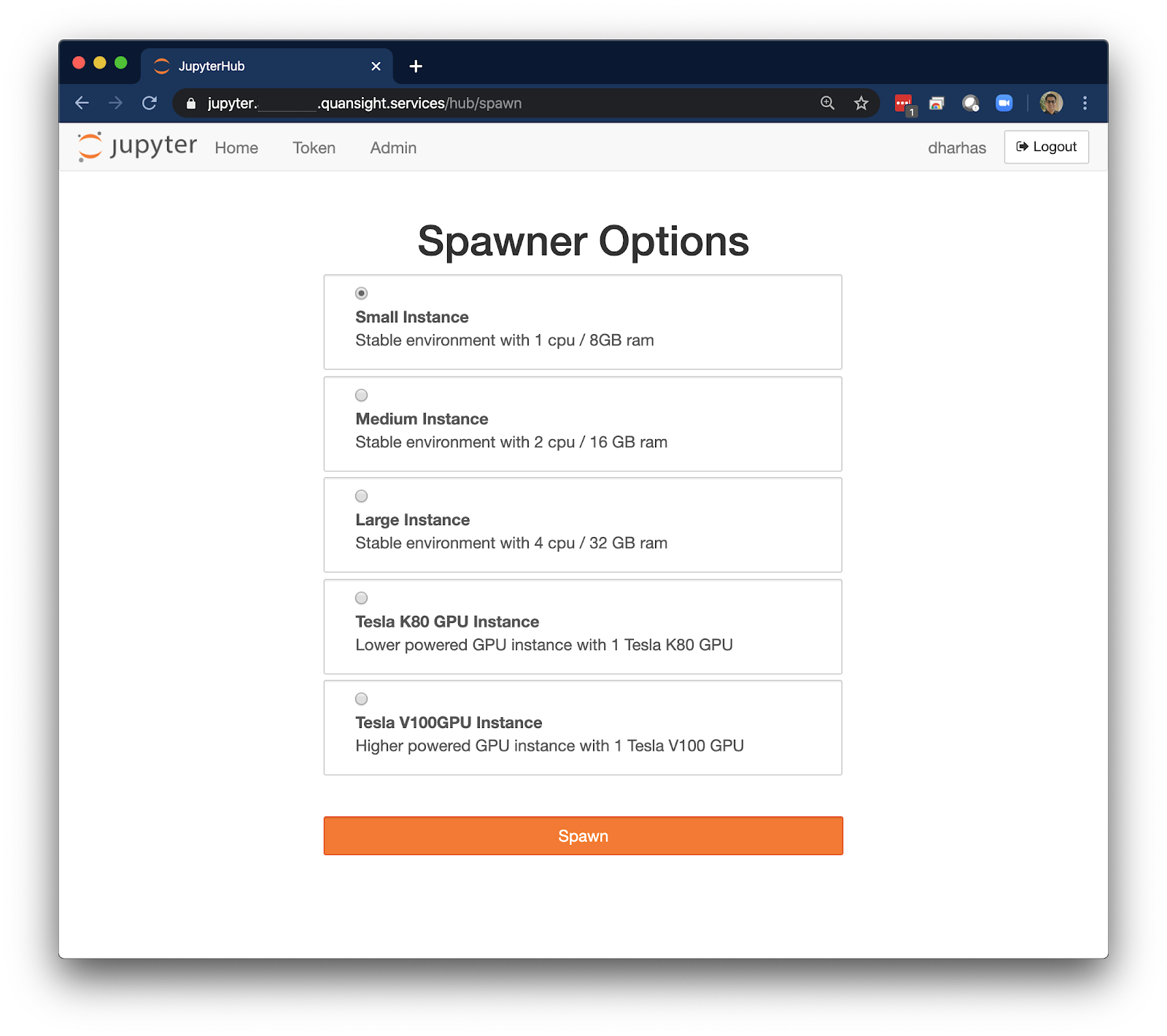

In addition, since the deployment is based on Kubernetes, new pods are spun up automatically as more users log in and more resources are required. These resources are scaled back down once they are no longer needed, which allows for cost-effective use of cloud resources. The base infrastructure costs of a QHub installation can vary from $50 to $200 depending on the cloud provider, storage size, and other options chosen. The incremental cost of each user that logs in can vary from approximately $0.02 to $2.50 per hour based on the instance types they choose. User pods are scaled down when users log out or when their session has been idle for a specified time (usually 15 to 30 minutes).
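As a rough worked example of the cost model described above, the sketch below adds a fixed base cost to the per-hour cost of each user session. The names `Session`, `estimate_cost`, and `should_scale_down` are hypothetical, and the numbers are picked from the ranges quoted in this post purely for illustration; actual costs depend on the cloud provider and the options chosen.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Session:
    hours: float        # how long the user's pod was running
    hourly_rate: float  # dollars per hour for the chosen instance type


def estimate_cost(base_cost: float, sessions: List[Session]) -> float:
    """Base infrastructure cost plus the per-hour cost of every user session."""
    return base_cost + sum(s.hours * s.hourly_rate for s in sessions)


def should_scale_down(idle_minutes: float, timeout_minutes: float = 30.0) -> bool:
    """A pod becomes a candidate for removal once it has been idle for the timeout."""
    return idle_minutes >= timeout_minutes


# Illustrative numbers only: a $100 base cost, one light session and one heavy one.
sessions = [
    Session(hours=40, hourly_rate=0.02),  # small instance
    Session(hours=10, hourly_rate=2.50),  # large instance
]
total = estimate_cost(100.0, sessions)
print(f"${total:.2f}")  # $125.80
```

The idle timeout matters for the second term: the sooner an unused pod is scaled down, the fewer billable hours each session accumulates.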

Specifying the instance type to spawn with QHub

While JupyterLab is an excellent platform for data science, it lacks some features that QHub accommodates. JupyterLab is not well suited for launching long-running jobs because the browser window needs to remain open for the duration of the job. It is also not the best tool for working on large codebases or debugging. To this end, QHub provides the ability to connect via the shell/terminal and through remote development environments like VS Code Remote and PyCharm Remote. This remote access connects to a pod that has the same filesystems, Dask cluster types, and environments that are available from the JupyterHub interface. It can be used effectively for long-running processes and more robust development and debugging. We will explore this capability in a future blog post about KubeSSH.

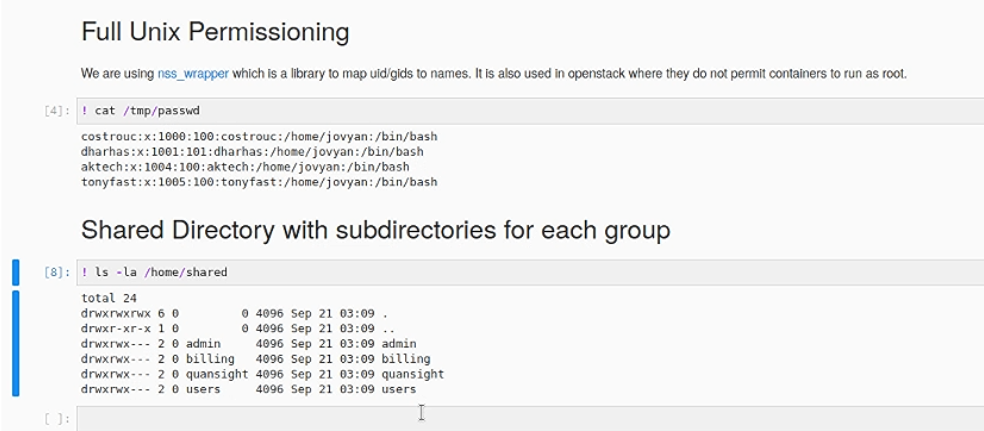

One of the deficiencies in most JupyterHub deployments is the lack of control over sharing. QHub provides a directory shared between all users via NFS and sets permissions associated with every user. In addition, QHub creates a shared directory for each group declared in the configuration, allowing for group-level protected directories, as shown in the figure below (bottom cell).

To achieve this robust permissioning model, we have followed OpenShift’s approach to controlling user permissions in a containerized ecosystem. QHub configures JupyterHub to launch a given user’s JupyterLab session with a set uid and primary/secondary group ids. To get the uid and gid mapping, we use nss_wrapper (which must be installed in the container), which allows non-root users to dynamically map ids to names. In the image below (top cell), we show the resulting Linux mapping of ids to usernames. This enables the full Linux permission model for each JupyterLab user.
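To make the id-to-name mapping more concrete, here is a minimal sketch of the kind of data nss_wrapper consumes: a passwd-style file, a group-style file, and the environment variables that point the preloaded library at them. The helper names, usernames, ids, and file paths are hypothetical and are not taken from QHub's code; only the file layouts and the `LD_PRELOAD`, `NSS_WRAPPER_PASSWD`, and `NSS_WRAPPER_GROUP` variables come from nss_wrapper itself.

```python
from typing import Dict, List


def passwd_entry(username: str, uid: int, gid: int, home: str,
                 shell: str = "/bin/bash") -> str:
    """Format one line in passwd(5) layout: name:password:UID:GID:GECOS:home:shell."""
    return f"{username}:x:{uid}:{gid}::{home}:{shell}"


def group_entry(groupname: str, gid: int, members: List[str]) -> str:
    """Format one line in group(5) layout: name:password:GID:member,member,..."""
    return f"{groupname}:x:{gid}:{','.join(members)}"


def nss_wrapper_env(passwd_path: str, group_path: str,
                    library: str = "libnss_wrapper.so") -> Dict[str, str]:
    """Environment variables that make nss_wrapper read the given files."""
    return {
        "LD_PRELOAD": library,
        "NSS_WRAPPER_PASSWD": passwd_path,
        "NSS_WRAPPER_GROUP": group_path,
    }


# Hypothetical user and group, for illustration only.
print(passwd_entry("alice", 1000, 100, "/home/alice"))
# alice:x:1000:100::/home/alice:/bin/bash
print(group_entry("analysts", 2000, ["alice", "bob"]))
# analysts:x:2000:alice,bob
print(nss_wrapper_env("/tmp/passwd", "/tmp/group")["NSS_WRAPPER_PASSWD"])
# /tmp/passwd
```

Because the files are ordinary text and the library is loaded through the environment, a non-root process in the container can resolve its own uid and gids to names without modifying `/etc/passwd`.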

Example of standard Linux-style permissioning in QHub

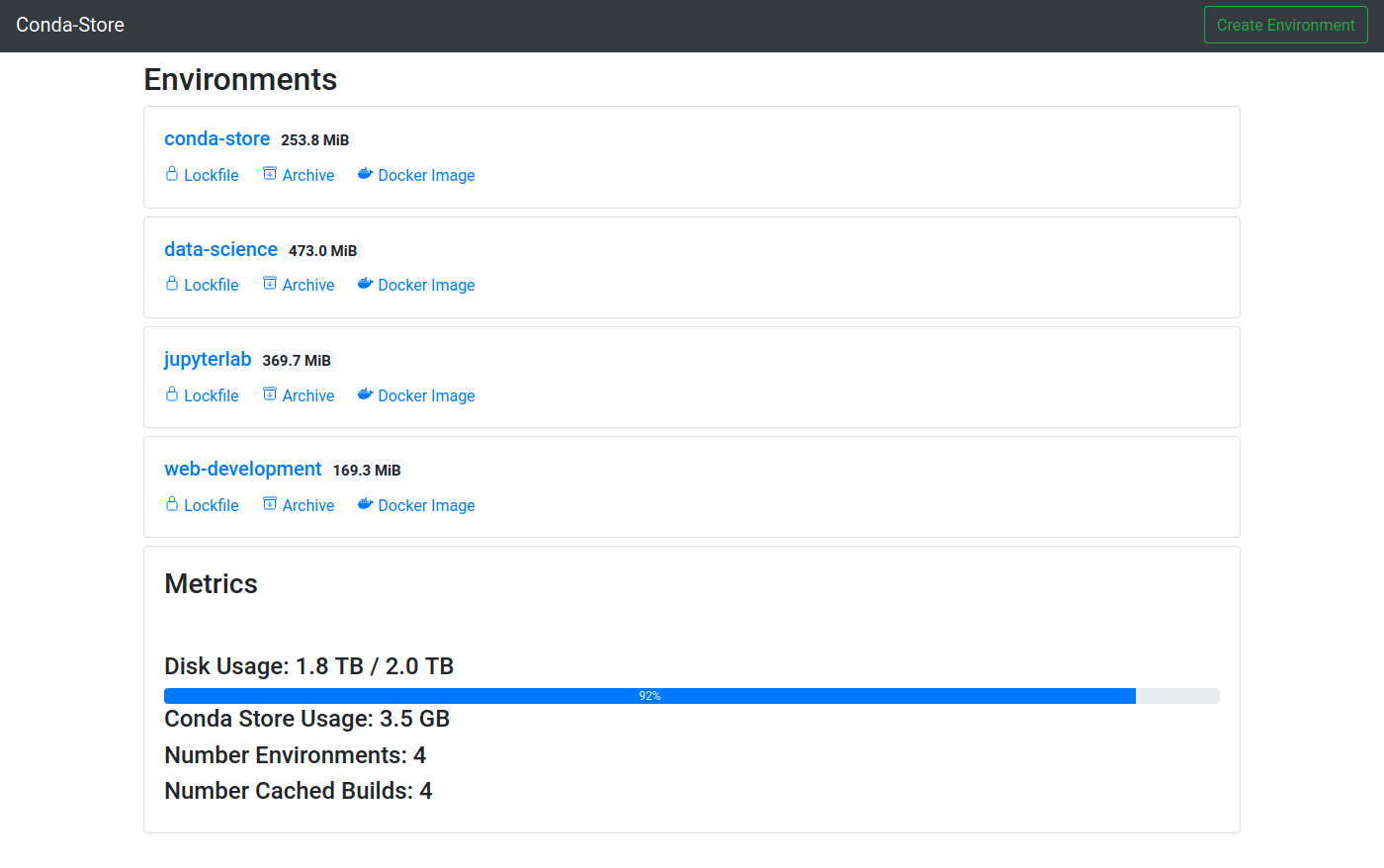
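The group-level protection described above relies on the standard Linux owner/group/other check. The following simplified sketch shows that check as a pure function; it deliberately ignores root privileges, ACLs, and other details of a real kernel, and the function name, uids, gids, and directory mode are hypothetical values chosen for illustration.

```python
import stat
from typing import Set


def can_access(uid: int, gids: Set[int], owner_uid: int, owner_gid: int, mode: int,
               want_read: bool = False, want_write: bool = False,
               want_execute: bool = False) -> bool:
    """Simplified Linux permission check using exactly one permission class."""
    # Only one class applies: owner if the uid matches, else group if any of
    # the user's gids matches, else other. There is no fall-through.
    if uid == owner_uid:
        r, w, x = stat.S_IRUSR, stat.S_IWUSR, stat.S_IXUSR
    elif owner_gid in gids:
        r, w, x = stat.S_IRGRP, stat.S_IWGRP, stat.S_IXGRP
    else:
        r, w, x = stat.S_IROTH, stat.S_IWOTH, stat.S_IXOTH
    needed = (r if want_read else 0) | (w if want_write else 0) | (x if want_execute else 0)
    return (mode & needed) == needed


# Hypothetical group directory: owned by uid 0 and gid 2000, mode rwxrwx---.
group_dir = dict(owner_uid=0, owner_gid=2000, mode=0o770)

# alice (uid 1000) belongs to gid 2000; bob (uid 1001) does not.
print(can_access(1000, {100, 2000}, want_write=True, **group_dir))  # True
print(can_access(1001, {100}, want_write=True, **group_dir))        # False
```

With a uid and a set of primary and secondary gids assigned to each JupyterLab session, this is the same decision the filesystem makes for every file in a shared or group directory.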

Data Science environments can be maddeningly complex, difficult to install, and even more difficult to share. In fact, it is exactly this issue that pushes many teams and organizations to look at centralized solutions like JupyterHub. But first, what do we mean by a Data Science environment? Basically, it is a set of Python/R packages along with their associated C or Fortran libraries. On the first pass, it seems logical to prebake these complex environments into the platform as a set of available options users can choose from. This method breaks down quickly because user needs change rapidly and different projects and teams have different requirements.

Using conda-store to manage cloud environments

Allowing end-users to build custom ad-hoc environments in the cloud is a hard problem, and Quansight has addressed it through the creation of two new open-source packages, conda-store and conda-docker. We will describe these packages in more detail in a future blog post. QHub’s integration with these packages allows for both pre-built controlled environments and ad-hoc user-created environments that are fully integrated with both JupyterLab and the autoscaling Dask compute clusters.

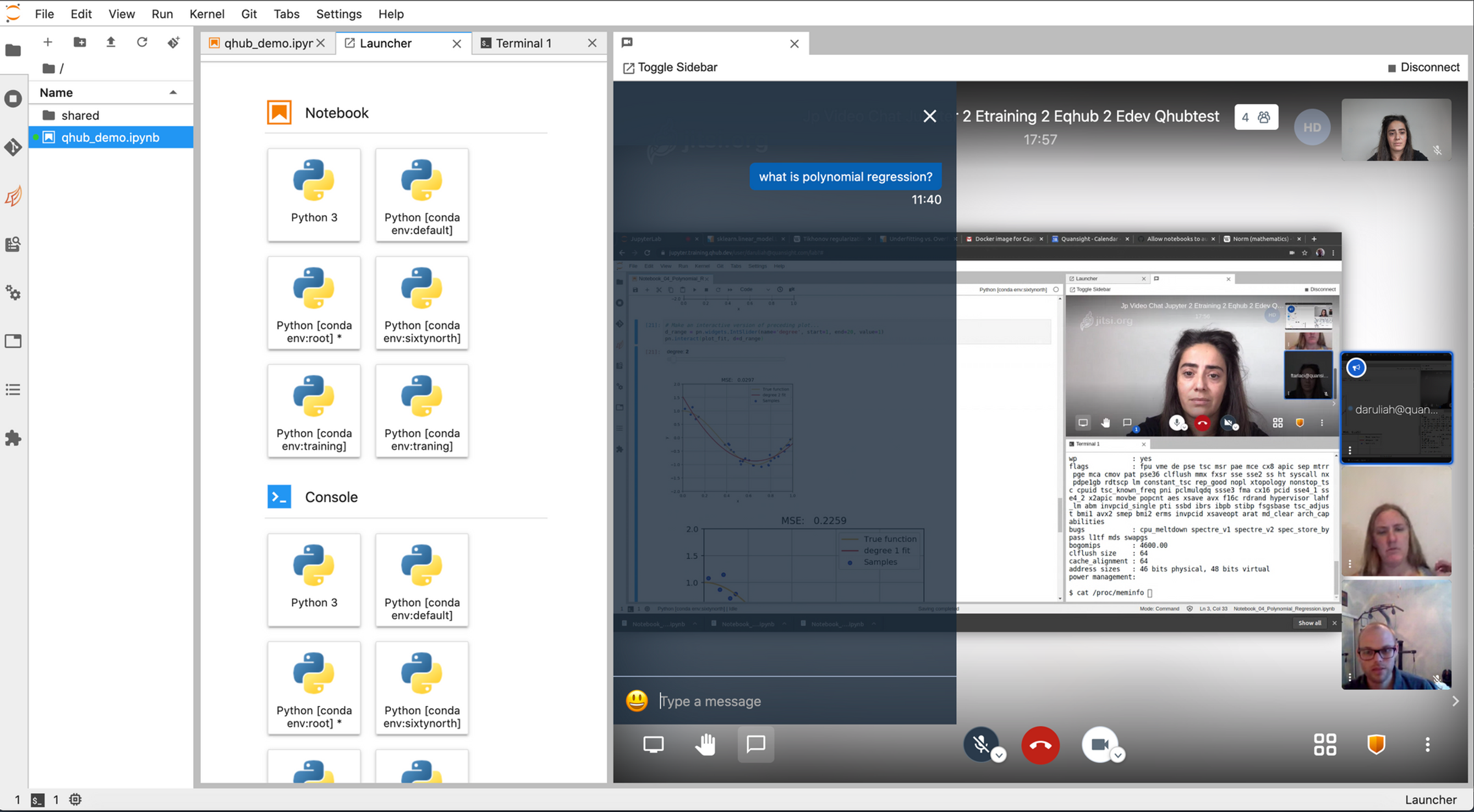

Jitsi is an open-source video-conferencing platform that supports the standard features of video-conferencing applications (e.g., screen-sharing, chat, group-view, and password protection) and provides an end-to-end encrypted self-hosting solution for those who need it. Thanks to the Jupyter Video Chat (JVC) extension, it is possible to embed a Jitsi instance within a pane of a JupyterLab session. The ability to manage an open-source video-conferencing tool within JupyterLab, alongside a terminal, notebook, file browser, and other conveniences, is remarkably useful for remote pair-programming and for teaching or training remotely.

Using the Jupyter Video Chat plug-in within a JupyterLab session

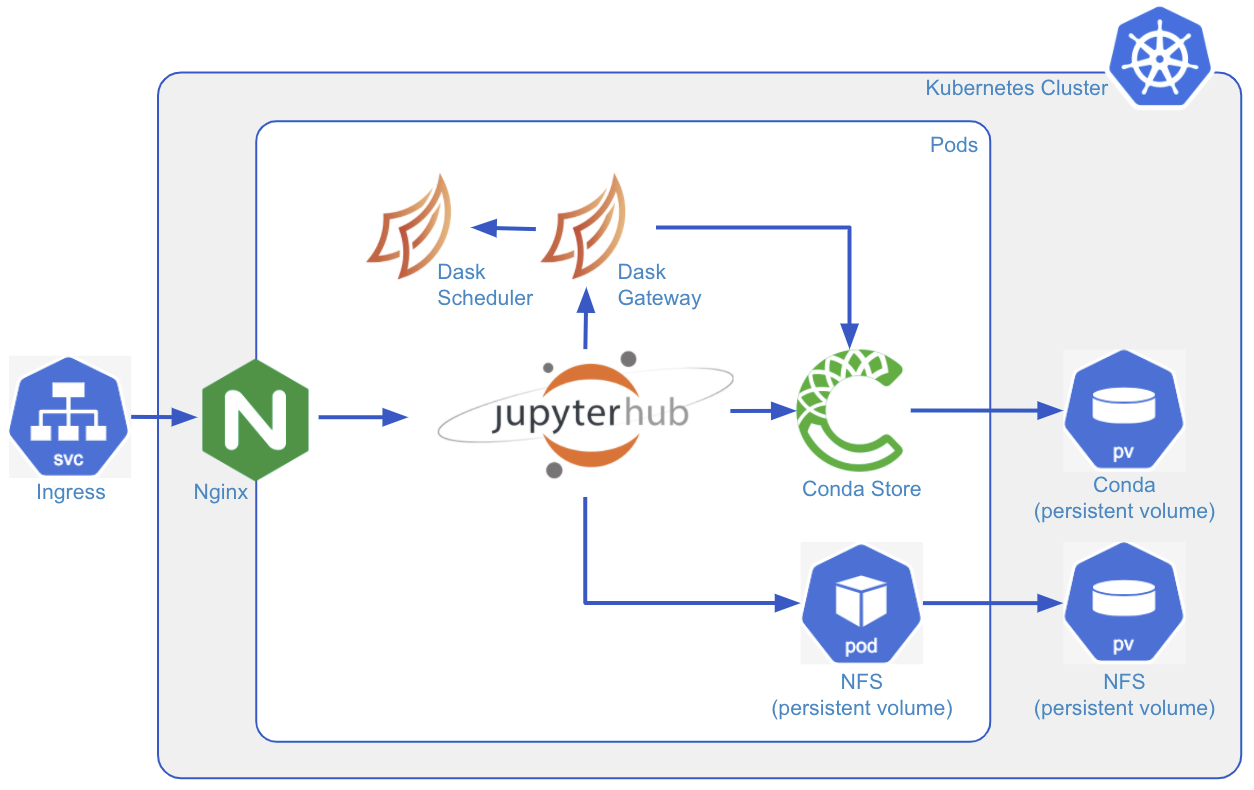

At its core, QHub can be thought of as a JupyterHub distribution that integrates the following existing open-source libraries:

Overview of the QHub stack

Quansight invites you to try QHub and give us your feedback. We use QHub in production with several clients and also use it as our internal platform and for Quansight training classes. We have found it to be a reliable platform, but because it is a new open source project, we expect that you will find rough edges. We are actively developing new features, and we invite you to get involved by contributing to QHub directly or to any of the upstream projects it depends on.

Documentation: https://nebari.dev (formerly https://qhub.dev)

Repository: https://github.com/nebari-dev/nebari (formerly https://github.com/Quansight/qhub)

Discussion: https://github.com/orgs/nebari-dev/discussions (formerly https://gitter.im/Quansight/qhub)

QHub is a JupyterHub distribution with a focused goal. It is an opinionated deployment of JupyterHub and Dask with shared file systems for teams with little to no Kubernetes experience. In addition, QHub makes some design decisions to enable it to be cross-platform on as many clouds as possible. The components that form QHub can be pulled apart and rearranged to support different enterprise use cases. If you would like help installing, supporting, or building on top of QHub or JupyterHub in your organization, please reach out to Quansight for a free consultation by sending an email to connect@quansight.com.

If you liked this article, check out our new blog post about JupyterLab 3.0 dynamic extensions.

Update: As we noted above, QHub is now Nebari. See the more recent post on Nebari and nebari.dev for more.